Decentralized Actor Scheduling and Reference-based Storage in Xorbits: a Native Scalable Data Science Engine

Weizheng Lu, Chao Hui, Yunhai Wang*, Feng Zhang, Yueguo Chen, Bao Liu, Chengjie Li, Zhaoxin Wu

Abstract

Data science pipelines consist of data preprocessing and transformation, and a typical pipeline comprises a series of operators, such as DataFrame filtering and groupby. As practitioners seek tools to handle larger-scale data while maintaining APIs compatible with popular single-machine libraries (e.g., pandas), scaling such a pipeline requires efficient distribution of decomposed tasks across the cluster and fine-grained, key-level intermediate storage management, two challenges that existing systems have not effectively addressed. Motivated by the requirements of scaling diverse data science applications, we present the design and implementation of Xorbits, a native scalable data science engine built on our decentralized actor model, Xoscar. Our actor model can eliminate dependency on a global scheduler and enable fast actor task scheduling. We also provide reference-based distributed storage with unified access across heterogeneous memory resources. Our evaluation demonstrates that Xorbits achieves up to 3.22x speedup on 3 machine learning pipelines and 22 data analysis workloads compared to state-of-the-art solutions. Xorbits is available on PyPI with nearly 1k daily downloads and has been successfully deployed in production environments.

Links

- The source code, data, and/or other artifacts have been made available at https://github.com/xorbitsai/xorbits, https://github.com/xorbitsai/xoscar.

Empirical Study & Observation

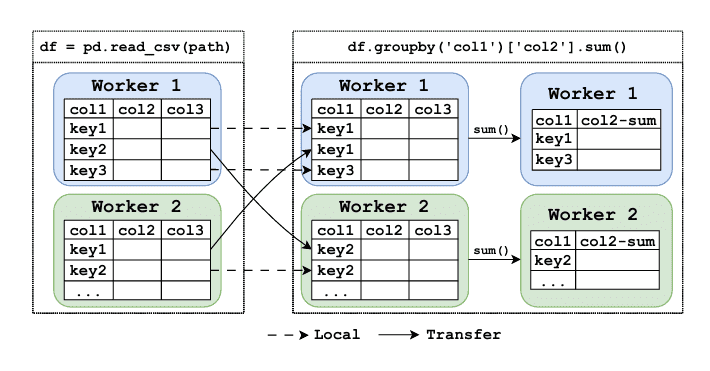

Figure 1: A simple data science pipeline in a distributed environment: loading data followed by a column-wise groupby. Note that this diagram provides a simplified illustration and does not show the complete MapReduce workflow.
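The pipeline in Figure 1 can be illustrated with a minimal, single-process sketch of a chunked groupby: each chunk is aggregated locally, and the partial results are then combined. This is only an illustration of the general map/combine idea, not Xorbits' implementation; the function names (`split_into_chunks`, `partial_groupby_sum`, `combine`) and the sample rows are hypothetical, and the chunks here stand in for data that would live on different workers.

```python
from collections import defaultdict


def split_into_chunks(rows, n_chunks):
    """Split rows into at most n_chunks contiguous chunks (stand-in for data loading)."""
    size = max(1, -(-len(rows) // n_chunks))  # ceiling division, at least 1
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def partial_groupby_sum(chunk):
    """Map step: aggregate a single chunk locally, keyed by the groupby column."""
    acc = defaultdict(float)
    for key, value in chunk:
        acc[key] += value
    return dict(acc)


def combine(partials):
    """Combine step: merge the per-chunk partial aggregates into the final result."""
    acc = defaultdict(float)
    for partial in partials:
        for key, value in partial.items():
            acc[key] += value
    return dict(acc)


# Hypothetical (key, value) rows; in a real deployment each chunk would sit on a worker.
rows = [("a", 1.0), ("b", 2.0), ("a", 3.0), ("c", 4.0), ("b", 5.0), ("a", 6.0)]
chunks = split_into_chunks(rows, 3)
partials = [partial_groupby_sum(chunk) for chunk in chunks]  # intermediate data
result = combine(partials)
print(result)
```

The per-chunk `partials` play the role of the intermediate data that a distributed engine has to store and move between workers, which is where key-level storage management becomes relevant.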

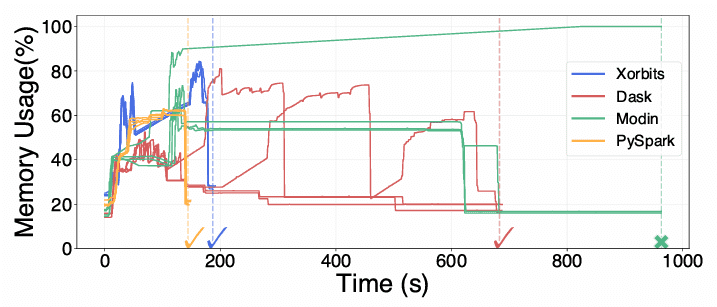

Figure 2: Memory usage during distributed DataFrame groupby on four workers using Xorbits, Dask, PySpark, and Modin on Ray. Each line represents one worker's memory usage over time.

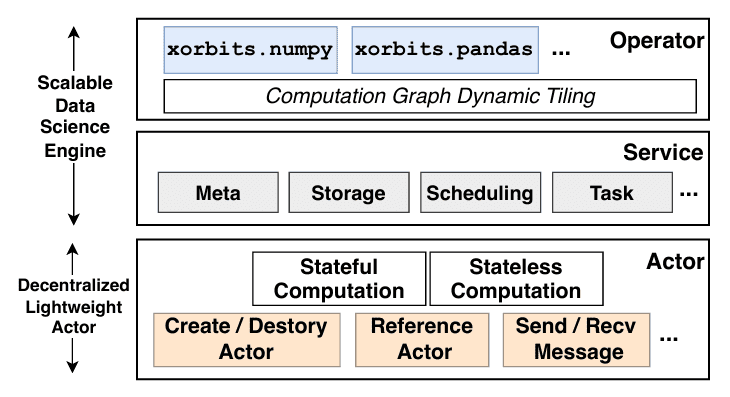

LIGHTWEIGHT ACTOR SCHEDULING

Figure 3: Xorbits system architecture: divided into the decentralized decentralized actor layer and the scalable data science engine layer.

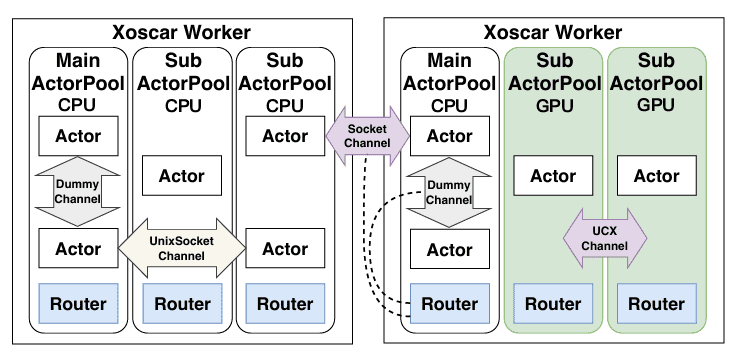

Figure 4: Xoscar architecture.

Reference-Based Distributed Storage

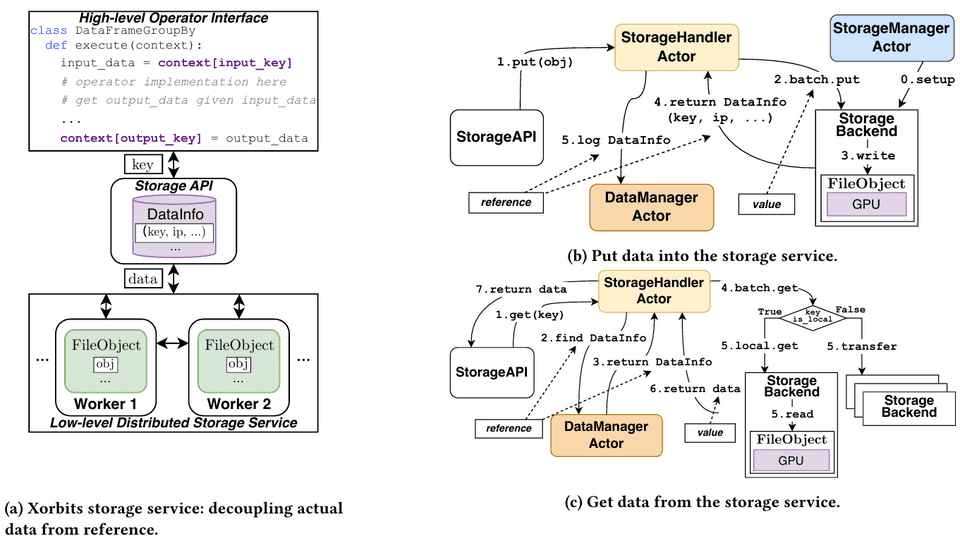

Figure 5: Xorbits storage: from operator implementation to distributed data access.

Evaluation

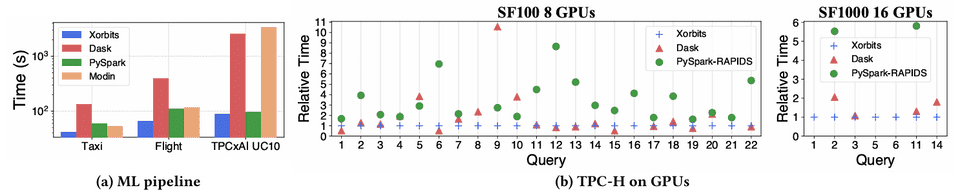

Figure 6: End-to-end workload performance.

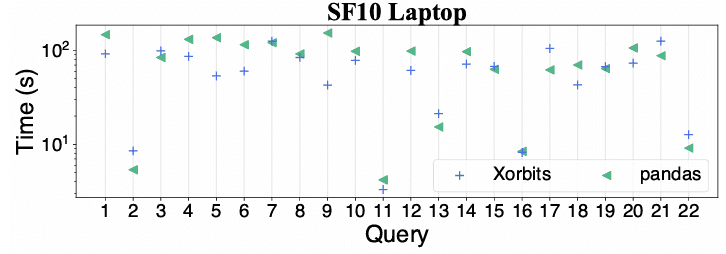

Figure 7: Comparison of Xorbits with the underlying pandas to measure the potential overhead introduced by Xorbits.

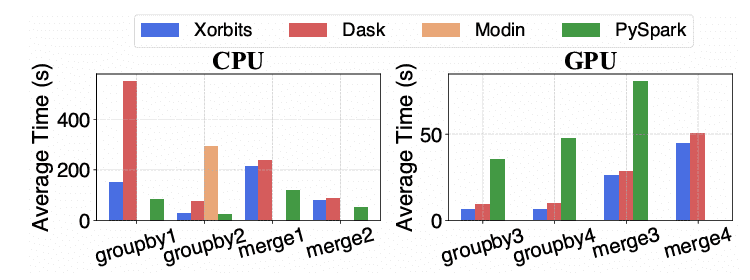

Figure 8: Experiment on two shuffle-intensive DataFrame operators, groupby and merge, on CPU (Left) and GPU (Right).

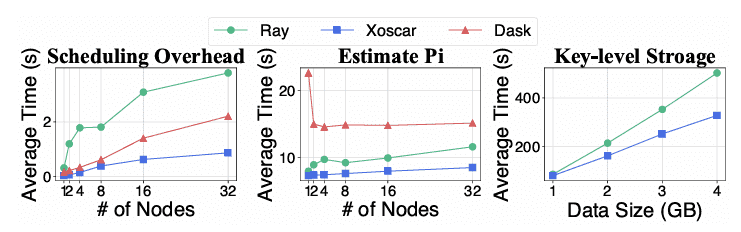

Figure 9: Micro-benchmarks: scheduling actors (Left), approximating π (Middle), and key-level data storage (Right).

Acknowledgments

This work is supported by the grants of the National Key R&D Program of China under Grant 2022ZD0160805, NSFC (No. 62132017 and No. U2436209), the Shandong Provincial Natural Science Foundation (No. ZQ2022jQ32), the Beijing Natural Science Foundation (L247027), the Fundamental Research Funds for the Central Universities, and the Research Funds of Renmin University of China (24XNK22). Yunhai Wang is the corresponding author of this paper.